LLM Rapid Prototyping: Local vs On-Cloud Deployment

Like many AI enthusiasts over the past few years, I’ve been experimenting with LLMs, RAG workflows, and comparing various models—including top-tier open-weight models. In this post, I’ll share my experience setting up a local development environment versus a fast-prototyping cloud deployment using infrastructure-as-code.

TL;DR

I began with a high-end local setup featuring a consumer-grade CPU and GPU. However, due to costs and scalability limitations, I ultimately sold the machine and switched to a cloud-based solution. Cloud infrastructure offers certain advantages: flexible scaling and overall better value for prototyping and short-term tasks.

LLM Hosting Environment

With my background in backend architecture, I needed both local and cloud environments to be reproducible and easy to set up. I chose Ollama, a fantastic tool that brings Docker-like capabilities to LLM management. You can pull, modify, and push models just like Docker containers. Once a model is pulled, Ollama allows interaction via a CLI or, more practically, its well-designed (though somewhat limited) API. It supports functionalities such as model configuration, streaming, and even image inputs.

For a front-end interface, I use OpenWebUI. It supports multi-user environments, access control, RAG implementation, and flexible integrations with tools like OCR or LLM backends. Naturally, it has full compatibility with Ollama.

Additional tooling includes Docker, GPU drivers, and other supporting infrastructure to run these components.

Local Environment

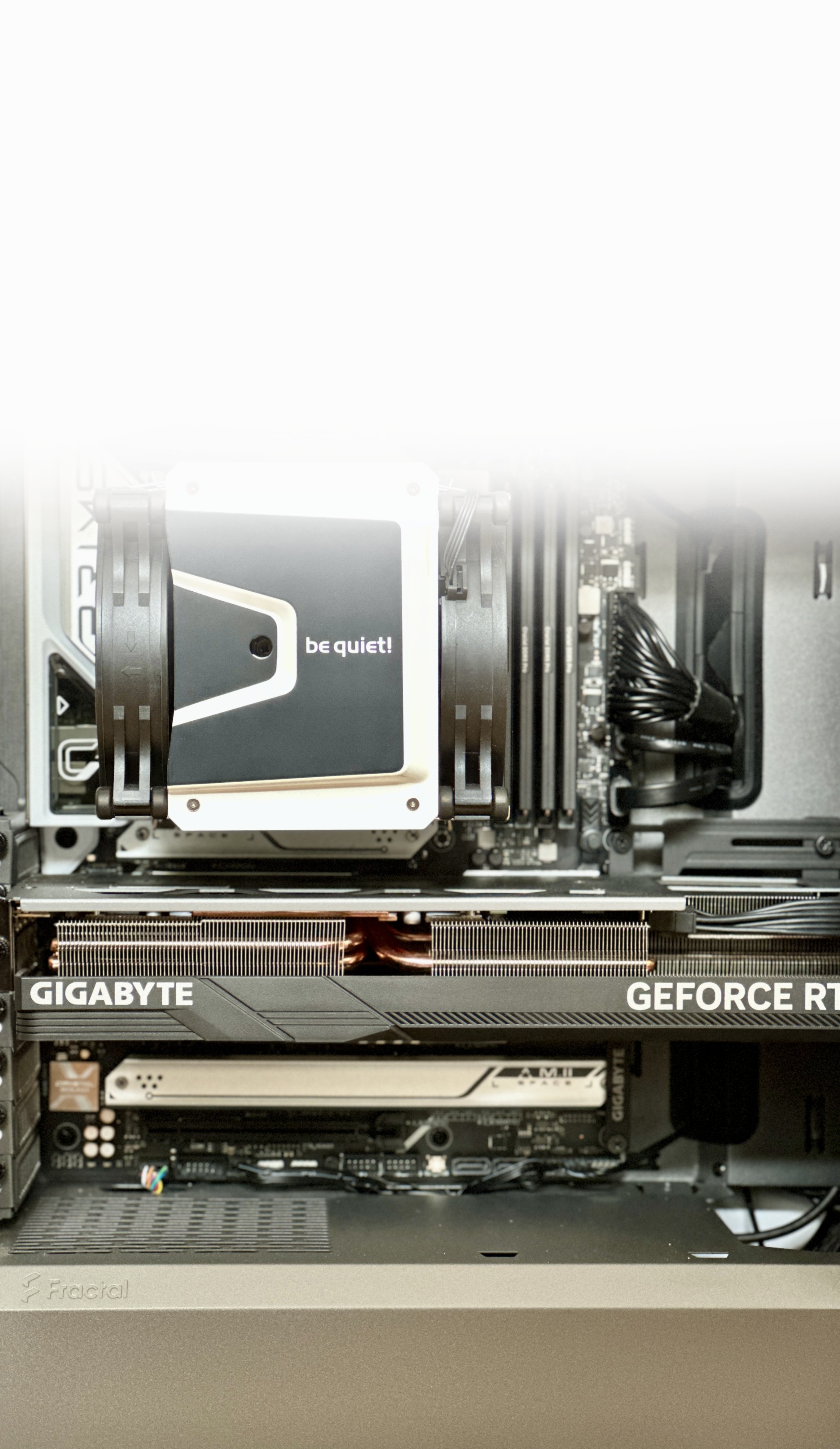

My machine was, at the time, top-tier for consumer-grade AI development:

CPU: Intel Core i9-14900K

GPU: Nvidia RTX 4090

RAM: 128 GB DDR5-5400

Storage: 8 TB NVMe M.2

OS: Dual-boot Ubuntu 20.04 and Windows 11

Hardware cost: ~€3,600 (assembled manually in early 2024)

Estimated power consumption: ~550W under full load

Electricity cost: Assuming €0.30/kWh and 20 hours/week average usage:

→ ~€14.30/month

Amortized cost over 5 years:

→ ~€60/month (hardware) + €14.30 (electricity) = €74.30/month

Cloud-Based Solution

I transitioned to using DigitalOcean for its cost efficiency and developer-friendly infrastructure. Their API allows automation via tools like Terraform. I wrote a Terraform script to provision a single-GPU H100 instance, with persistent storage maintained across instance terminations (see this line).

Instance Cost: ~$4/hour

Setup Time: ~15 minutes

Usage Assumption: 5 hours/week

Estimated Monthly Cost:

→ 5 hrs/week × 4 weeks = 20 hrs/month

→ 20 hrs × $4/hr = $80/month (~€73/month)

Local vs Cloud: Cost and Performance

Spec | Local (14900K + 4090) | Cloud (DO H100 Instance) |

|---|---|---|

GPU | Nvidia RTX 4090 (24 GB VRAM) | Nvidia H100 (80 GB VRAM) |

CPU | Intel i9-14900K (24 threads) | 20 vCPUs |

RAM | 128 GB DDR5 | 240 GiB |

Storage (Boot) | 8 TB NVMe | 720 GiB NVMe |

Scratch Disk | — | 5 TiB NVMe |

Network Transfer | — | 15,000 GiB |